In our previous discussions (1, 2, 3), we’ve explored the intricate relationship between cognitive biases and algorithms. We’ve uncovered how algorithms, instead of reflecting our true preferences, often mirror our subconscious biases. This journey has illuminated the subtle ways in which our automatic choices inadvertently influence the algorithms that shape our online world.

User Behavior Insights Across Major Digital Platforms

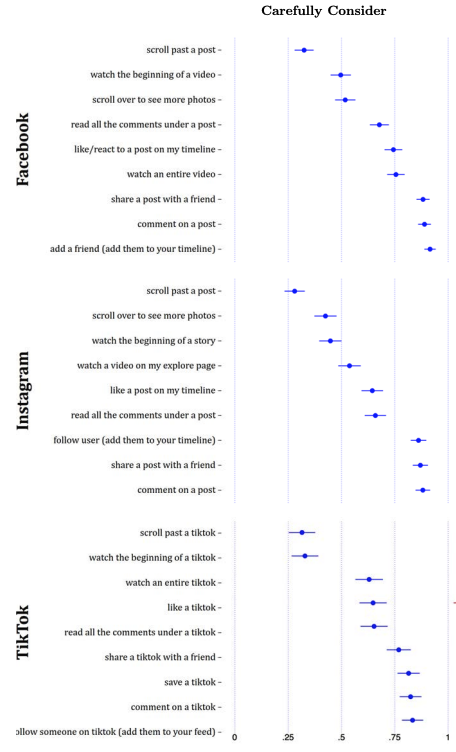

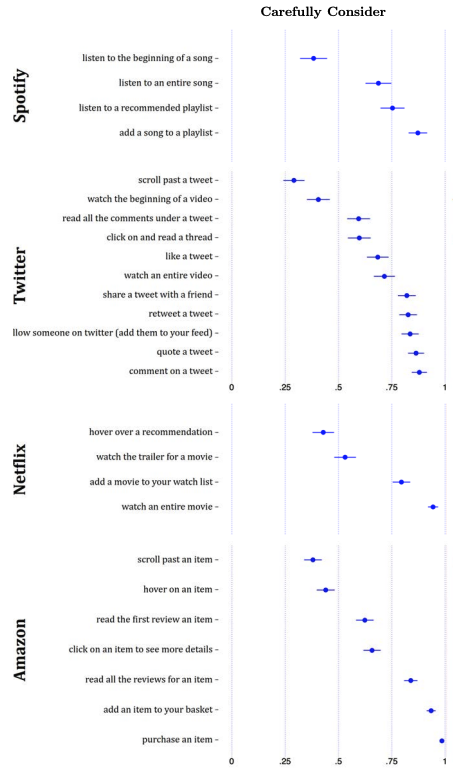

In their study, Agan et al. (2023) conducted a survey exploring user behavior on major digital platforms, encompassing social media giants like X (Twitter) and TikTok, streaming services such as Spotify and Netflix, and the e-commerce leader, Amazon.

Their results, shown in the figures above, highlight how the automaticity of user engagement varies across specific features within each platform.

On TikTok, scrolling through posts is identified as the most automatic, or subconscious, behavior, while deciding to follow someone is a more deliberate action that requires greater cognitive involvement.

The same pattern appears on Amazon, where browsing products is more automatic than the deliberate act of making a purchase.

(Source: Agan, Amanda Y., et al. Automating automaticity: How the context of human choice affects the extent of algorithmic bias. No. w30981. National Bureau of Economic Research, 2023)

The Gap in Algorithm Design

Agan et al. (2023) pose a central question: Do recommendation algorithms on platforms like TikTok and Twitter account for the varying degrees of automaticity in user interactions across different features?

According to The New York Times, TikTok’s algorithm relies on a weighted average of predictive factors, such as likes, comments, and video play duration. Twitter has open-sourced the precise weights it uses, and its method likewise rests on a weighted combination of behavioral predictions.
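To make the idea of a weighted combination concrete, here is a minimal Python sketch. The signal names (`p_like`, `p_comment`, `expected_watch_fraction`), the weights, and the candidate videos are hypothetical and are not the values TikTok or Twitter actually use. The score is written as a weighted sum, which ranks items exactly as a weighted average with the same relative weights would.

```python
from typing import Dict

Predictions = Dict[str, float]

# Hypothetical weights for illustration only; NOT the real TikTok or Twitter values.
WEIGHTS: Predictions = {
    "p_like": 1.0,                   # predicted probability of a like
    "p_comment": 2.0,                # predicted probability of a comment
    "expected_watch_fraction": 0.5,  # predicted share of the video watched
}


def ranking_score(predictions: Predictions, weights: Predictions = WEIGHTS) -> float:
    """Weighted sum of an item's predicted behaviors (missing signals count as 0)."""
    return sum(w * predictions.get(name, 0.0) for name, w in weights.items())


# Hypothetical model predictions for two candidate videos.
candidates: Dict[str, Predictions] = {
    "video_a": {"p_like": 0.10, "p_comment": 0.01, "expected_watch_fraction": 0.80},
    "video_b": {"p_like": 0.05, "p_comment": 0.05, "expected_watch_fraction": 0.40},
}

# Highest score first.
ranked = sorted(candidates, key=lambda vid: ranking_score(candidates[vid]), reverse=True)
print(ranked)  # ['video_a', 'video_b']
```

Every signal here enters the score in the same way, regardless of whether the behavior behind it is automatic or deliberate.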

However, these methods appear to overlook a critical factor: how automatic each user behavior is. Treating a reflexive scroll and a deliberate follow as comparable signals risks a significant misalignment, in which recommendations reflect impulsive engagement rather than users’ actual preferences. This gap between user behavior and algorithmic interpretation underlines the need for a more nuanced understanding of user engagement on digital platforms.

Enhancing Algorithms with Explicit User Preferences

Agan et al. (2023) propose that platforms actively collect explicit preference data from users through surveys, a key step toward algorithms that more accurately reflect genuine user interests.

By capturing explicit preferences, platforms can better distinguish between content that users truly value and content that merely triggers automatic behavioral responses. These data can help the algorithm detect where users’ behavior systematically diverges from their actual preferences.
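As a rough illustration, survey answers could be compared item by item with behavioral engagement. The sketch below is an assumption for illustration, not a procedure from Agan et al. (2023): it rescales a hypothetical engagement score and a 1-to-5 survey rating to [0, 1], then flags items where engagement exceeds stated value by more than an arbitrary threshold of 0.3.

```python
from typing import Dict, List


def divergence_gaps(engagement: Dict[str, float], stated: Dict[str, float]) -> Dict[str, float]:
    """Engagement minus stated preference, both on [0, 1].

    A positive gap means the user engages with an item more than their
    survey answer suggests they value it.
    """
    return {item: engagement[item] - stated[item] for item in engagement if item in stated}


def flag_divergent(gaps: Dict[str, float], threshold: float = 0.3) -> List[str]:
    """Items whose engagement exceeds stated value by more than `threshold`."""
    return sorted(item for item, gap in gaps.items() if gap > threshold)


# Hypothetical data: engagement already rescaled to [0, 1];
# survey ratings on a 1-5 scale, mapped linearly to [0, 1].
engagement = {"clip_x": 0.9, "clip_y": 0.6, "clip_z": 0.2}
survey_1_to_5 = {"clip_x": 2, "clip_y": 4, "clip_z": 3}
stated = {item: (rating - 1) / 4 for item, rating in survey_1_to_5.items()}

gaps = divergence_gaps(engagement, stated)
print(flag_divergent(gaps))  # ['clip_x']
```

Here `clip_x` is heavily engaged with but rated low, the kind of case where behavior and stated preference diverge.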

Integrating Psychological Insights into Algorithm Design

The study further recommends that platforms adopt psychological survey methods to measure the degree of automaticity in different user behaviors, gathering such data for each behavior that feeds into the algorithm.

With measurements of how automatic each action is, platforms can calibrate how much weight each behavioral signal receives. Such calibration favors recommendations that reflect users’ considered choices rather than impulsive reactions, which should bring algorithms into closer alignment with users’ genuine preferences and improve the relevance and value of the content they encounter.
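One simple way to picture this calibration is to shrink each signal’s weight in proportion to its measured automaticity. The rule `w * (1 - strength * a)` below, the signal names, and all numbers are hypothetical; Agan et al. (2023) do not prescribe this formula. The sketch only shows how down-weighting an automatic signal such as dwell time while scrolling can change which item ranks first.

```python
from typing import Dict, List

Signals = Dict[str, float]

# Hypothetical values for illustration only: equal base weights, and
# automaticity scores in [0, 1] (1 = fully automatic, 0 = fully deliberate)
# of the kind a psychological survey might produce.
BASE_WEIGHTS: Signals = {"dwell": 1.0, "like": 1.0, "follow": 1.0}
AUTOMATICITY: Signals = {"dwell": 0.9, "like": 0.5, "follow": 0.1}


def adjust_weights(base: Signals, automaticity: Signals, strength: float = 1.0) -> Signals:
    """Shrink each signal's weight in proportion to how automatic it is.

    strength = 0 keeps the base weights; strength = 1 gives w * (1 - a).
    """
    return {s: w * (1.0 - strength * automaticity[s]) for s, w in base.items()}


def score(signals: Signals, weights: Signals) -> float:
    """Weighted sum of an item's predicted behavioral signals."""
    return sum(w * signals.get(s, 0.0) for s, w in weights.items())


def rank(items: Dict[str, Signals], weights: Signals) -> List[str]:
    """Item names ordered from highest to lowest score."""
    return sorted(items, key=lambda name: score(items[name], weights), reverse=True)


# Hypothetical predictions: item_p draws mostly automatic dwell time,
# item_q draws more deliberate follows.
items: Dict[str, Signals] = {
    "item_p": {"dwell": 0.9, "like": 0.2, "follow": 0.0},
    "item_q": {"dwell": 0.3, "like": 0.3, "follow": 0.4},
}

adjusted = adjust_weights(BASE_WEIGHTS, AUTOMATICITY)
print(rank(items, BASE_WEIGHTS))  # ['item_p', 'item_q']
print(rank(items, adjusted))      # ['item_q', 'item_p']
```

Setting `strength` between 0 and 1 interpolates between the original and fully adjusted weights, so the degree of discounting is itself a tunable choice.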

Selecting Optimal Weights in Recommender Systems

As we continue to unravel the complexities of algorithmic decision-making, a pivotal question remains: How can platforms optimally select weights for their algorithms?

In my upcoming blog post, ‘Choosing the Right Weights in Algorithm,’ I’ll delve into the insightful study by Smitha Milli, Emma Pierson, and Nikhil Garg from Cornell Tech. Their research paper, ‘Choosing the Right Weights: Balancing Value, Strategy, and Noise in Recommender Systems,’ provides an in-depth analysis of how optimal weight choices can be made from both user and platform perspectives.

Stay tuned for an engaging review that promises to shed light on optimizing algorithmic systems, a critical step towards more accurately reflecting user preferences in the digital world!